I’m building a 2D/3D hybrid graphic design tool called Manifold. In this article I’m going to discuss the approach I’m taking to fit it into the workflow I need for building games. I see this problem from a “recipe” perspective: what do I want the process to look like, and what tools do I need to execute it?

In a commercial studio environment, there are dedicated teams of people committed to individual aspects of a game’s look: concept artists, modelers, animators, effects people, etc. As an indie game developer, I find replicating those traditional processes outright impossible, simply due to time.

I don’t need the polished look and feel of commercial games like Far Cry 4. Using modern effects is one thing, but handcrafting a 3D scene with characters alone could wipe out a year or two of development, and that’s before the assets are ready for playtesting.

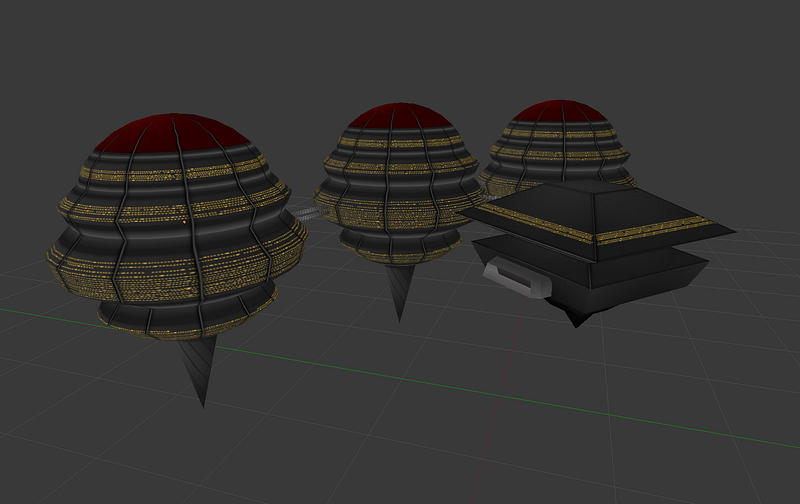

My production pipeline needs to be made of highly intuitive tools that help me rapidly prototype something from sketches, or even from nothing (hello, Alchemy). I would be happy with a PS2-era result that can be made in a day or two of prototyping and polish.

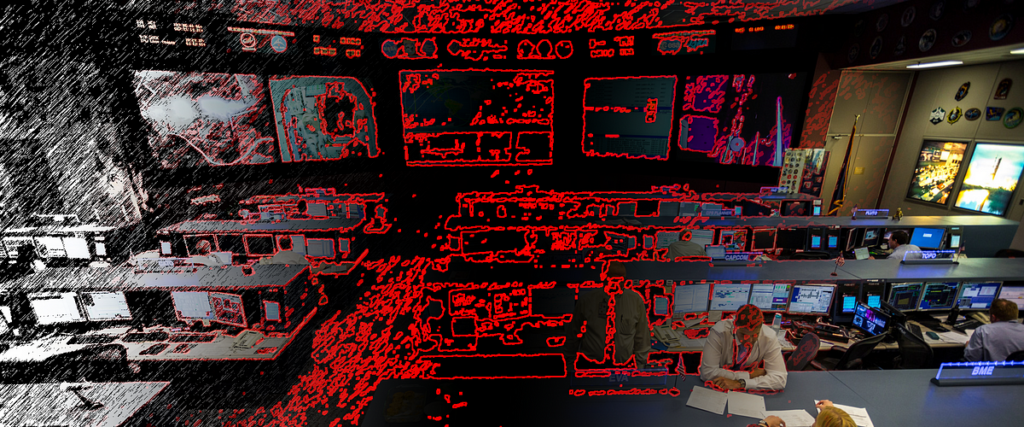

Combining edge detection techniques, vector editing and some well-thought-out procedural generation tools should give me what I need to get a basic functional version of Manifold running. Edge detection alone is a craft, almost a field of study (e.g. https://en.wikipedia.org/wiki/Canny_edge_detector), so it’s going to be interesting to prototype this functionality while leaving things open for extensibility and change.
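
To make the starting point concrete, here is a minimal sketch of the gradient step that Canny builds on: convolve a grayscale image with Sobel kernels and threshold the gradient magnitude. This is not full Canny, which adds Gaussian smoothing, non-maximum suppression and hysteresis thresholding on top. The function names (`sobel_magnitude`, `edge_mask`) and the single-threshold mask are hypothetical illustrations, not part of Manifold.

```python
from typing import List

Image = List[List[float]]  # grayscale, indexed as img[y][x]

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Return the gradient magnitude at each interior pixel (border pixels are 0)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            # Accumulate both kernel responses over the 3x3 neighbourhood.
            for j in range(3):
                for i in range(3):
                    p = img[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_mask(img: Image, threshold: float) -> List[List[bool]]:
    """Hypothetical helper: mark pixels whose gradient magnitude exceeds one threshold."""
    return [[m > threshold for m in row] for row in sobel_magnitude(img)]
```

On an image whose left two columns are dark and right three are bright, the mask lights up the two columns straddling the boundary. Replacing the single threshold with Canny's thinning and hysteresis stages would be the natural next step.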

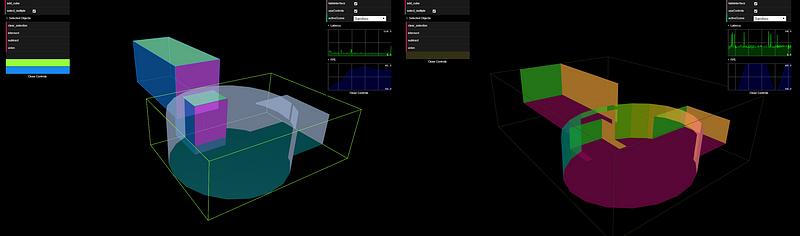

Once the shapes are found, a perspective and basic structure would need to be laid on top. These assistance tools are going to be the most annoying to develop and maintain because of the UI controller logic, so coming up with a good UI framework from the start is going to be key.

The image below depicts a perspective grid as applied to an image. I can’t be bothered creating concept art, so read carefully: imagine the red lines from the image above being “anchored” to a 3D scene perspective. Those individual shapes, or vectors, can then be extruded, rotated around a cylinder, or whatever is required to create the individual objects within the scene.
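
As an illustration of the extrusion step only, here is a minimal sketch that turns a closed 2D outline into a prism mesh. It assumes the outline has already been anchored to a plane in scene space (taken here as z = 0), is counter-clockwise and does not self-intersect; the perspective anchoring itself is not shown, and `extrude` is a hypothetical name.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Face = Tuple[int, ...]


def extrude(outline: List[Vec2], depth: float) -> Tuple[List[Vec3], List[Face]]:
    """Extrude a closed CCW outline on the z = 0 plane along +z into a prism.

    Returns (vertices, faces), where each face is a tuple of vertex indices.
    """
    n = len(outline)
    if n < 3:
        raise ValueError("an outline needs at least three points")
    bottom = [(x, y, 0.0) for x, y in outline]
    top = [(x, y, depth) for x, y in outline]
    vertices = bottom + top
    faces: List[Face] = []
    # Bottom cap is reversed so its normal points along -z; the top cap keeps
    # the CCW order so its normal points along +z.
    faces.append(tuple(reversed(range(n))))
    faces.append(tuple(range(n, 2 * n)))
    # One outward-facing quad per outline edge, joining the bottom and top rings.
    for i in range(n):
        j = (i + 1) % n
        faces.append((i, j, n + j, n + i))
    return vertices, faces
```

A unit square produces 8 vertices and 6 faces (two caps plus four sides). Lathing a profile around an axis would follow the same pattern, with rings of rotated copies instead of a single offset copy.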

There are two commercial solutions today that cross into the functionality I’m talking about, but both are highly specialized for different applications: Xfrog, which is designed for making plants, and Houdini FX, which is designed for complicated effects. Both have very powerful bulk editing tools for procedurally generated geometry, something that will be key to Manifold’s success.

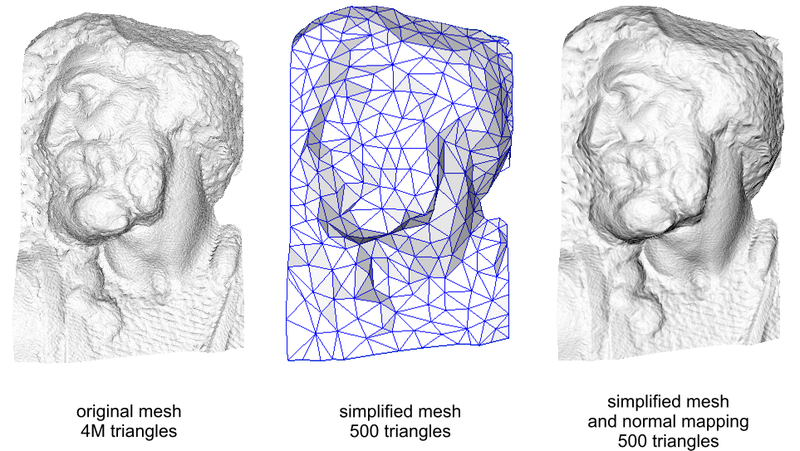

Basic modelling aside, I also want to be able to collapse complex surface and structural geometry into simpler shapes and, definitely, normal maps. This is similar to using a clay modelling tool to re-form structures from a technical model into an optimised visual one. It will be crucial for making curved surfaces and natural shapes look correct.
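
Baking a normal map from high-poly geometry onto a low-poly mesh usually involves ray casting between the two surfaces. As a simpler stand-in that shows what a normal map encodes, here is a minimal sketch that derives tangent-space normals from a height map using central differences. The `strength` parameter, edge clamping and the +Y sign convention are assumptions (engines differ on whether the green channel points up or down), and the function names are hypothetical.

```python
from typing import List, Tuple

Normal = Tuple[float, float, float]


def height_to_normals(height: List[List[float]], strength: float = 1.0) -> List[List[Normal]]:
    """Convert a height map (height[y][x]) into unit tangent-space normals."""
    h, w = len(height), len(height[0])

    def sample(x: int, y: int) -> float:
        # Clamp to the edge so border pixels still get a normal.
        return height[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    normals: List[List[Normal]] = []
    for y in range(h):
        row: List[Normal] = []
        for x in range(w):
            # Central-difference slopes in x and y, scaled by strength.
            dx = (sample(x + 1, y) - sample(x - 1, y)) * 0.5 * strength
            dy = (sample(x, y + 1) - sample(x, y - 1)) * 0.5 * strength
            nx, ny, nz = -dx, -dy, 1.0
            length = (nx * nx + ny * ny + nz * nz) ** 0.5
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


def encode_rgb(n: Normal) -> Tuple[int, int, int]:
    """Map each component from [-1, 1] to a 0-255 colour channel."""
    r, g, b = (round((c * 0.5 + 0.5) * 255) for c in n)
    return (r, g, b)
```

A flat height map encodes to the familiar light-blue (128, 128, 255), while slopes tilt the normal away from +z and shift the red and green channels.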

I still don’t know what I’m going to do about texture painting. I think the best way to solve the problem is to define it first. The original Langenium approach to textures was to have tiled materials such as metal or glass that could be applied to any object… but this really limits what you can make look good. Modern 3D graphics tend to be more complicated.

Modelling seems to be more or less covered; there’s enough for me to design a UI and process. Before prototyping, though, texture painting and general painting tools are my next priority. I would like Manifold to fully replace Adobe Illustrator in my design process across all projects, even general web design. That may seem like a tall order, but I only use a fraction of Illustrator’s functionality, and as a developer I know I can tailor-make something that will do a better job for my needs. Besides, drawing shapes, colouring them, and using layer transparency and masking tools is not cutting-edge technology, unlike what I’ve had to wrap my head around for Langenium.

In my next article, I’m going to look at 2D drawing techniques and how I might leverage the similarities between the processes that drive 2D and 3D work to reach a usable prototype sooner. I’m pursuing a design-first approach until I reach a prototype, at which point I’ll let my own experience with it drive the development process.